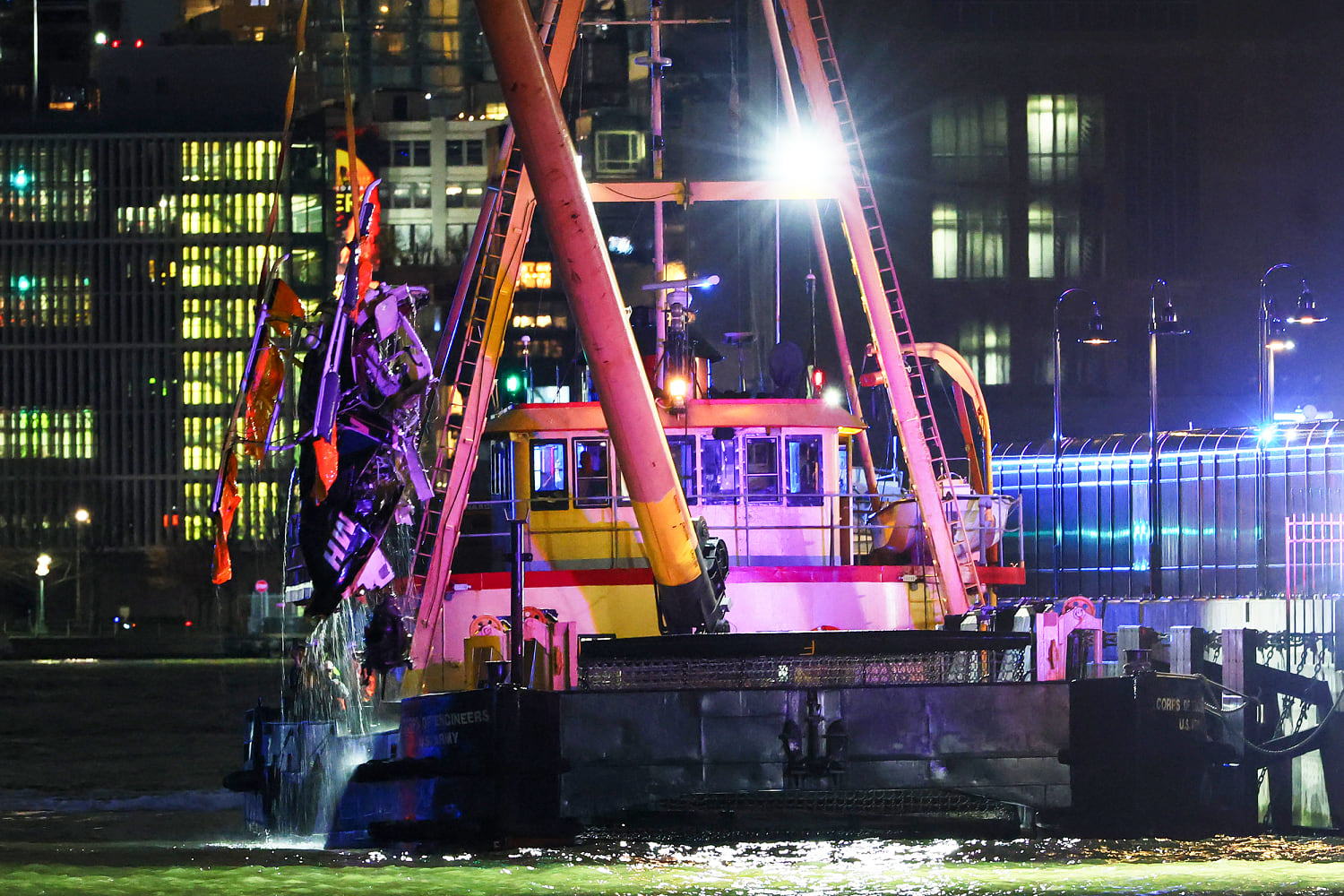

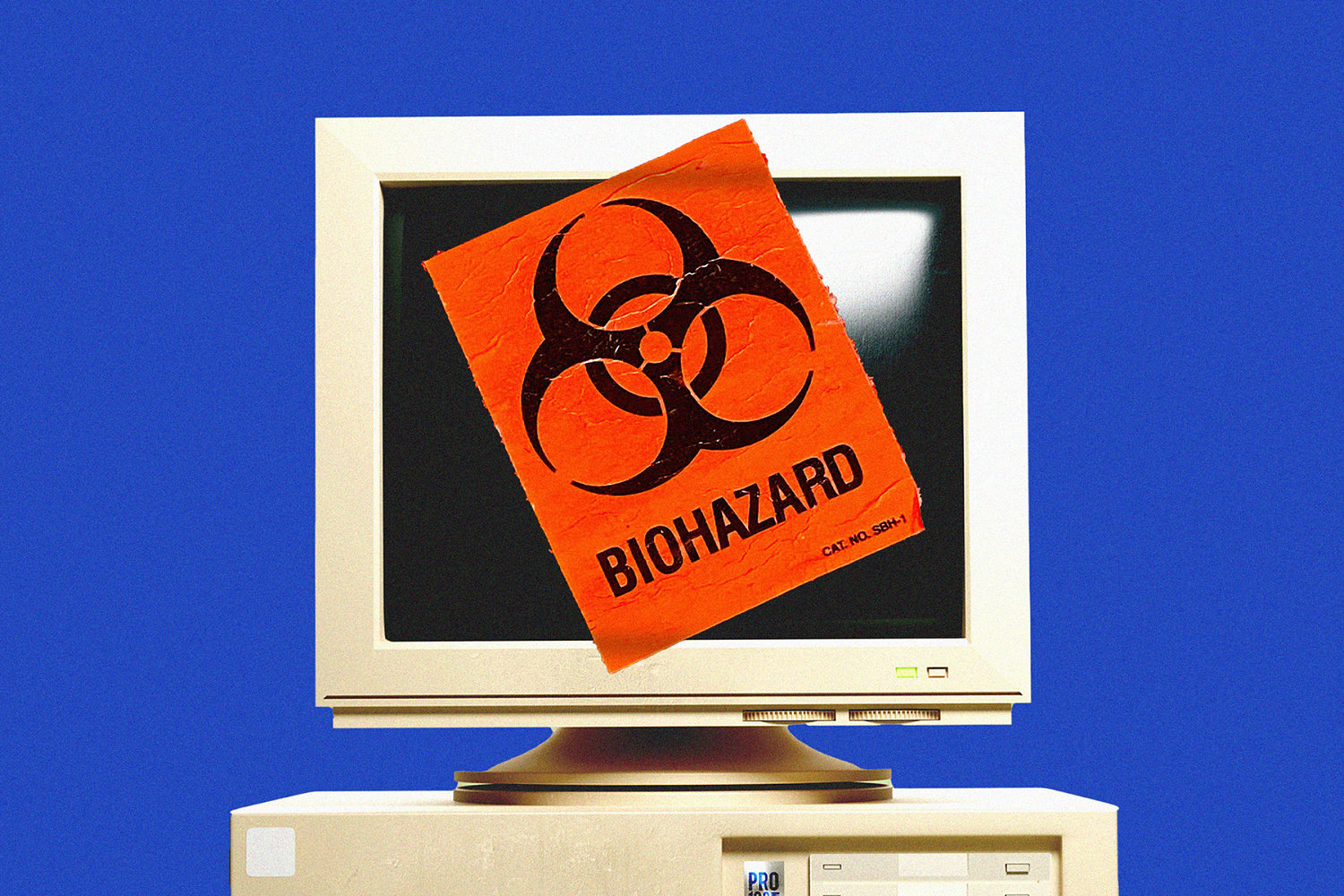

ChatGPT safety systems can be bypassed to get weapons instructions

NBC News found that OpenAI’s models repeatedly provided answers on making chemical and biological weapons.
